In the evolving field of large language models (LLMs), understanding file naming conventions is essential for developers and researchers. An LLM file name typically encodes key details such as the model version, parameter count, intended purpose, quantization level, and file format. This guide covers common file formats such as .gguf, .onnx, and .pt, as well as precision and quantization markers like Q4 and FP16. By decoding these labels, users can navigate LLM libraries more easily and choose models suited to specific tasks or deployment constraints.
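As a rough illustration of how these labels can be decoded, here is a small Python sketch that extracts the file format, parameter count, precision marker, and purpose from a file name. The regular expressions, the `ModelFileInfo` fields, and the example file names are hypothetical assumptions. Naming schemes differ between publishers, so treat this as a heuristic rather than a parser for any official convention.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumed heuristic: a fragment only counts when bounded by separators
# ("-", "_", ".") or the string edges, so "7b" matches but "2" in "llama-2" does not.
_EDGE_L = r"(?<![a-z0-9])"
_EDGE_R = r"(?![a-z0-9])"
PARAMS_RE = re.compile(_EDGE_L + r"(\d+(?:\.\d+)?)b" + _EDGE_R)
QUANT_RE = re.compile(_EDGE_L + r"(q\d(?:_[a-z0-9]+)*|bf16|fp16|fp32|f16|f32)" + _EDGE_R)
PURPOSE_RE = re.compile(_EDGE_L + r"(chat|instruct|base|code)" + _EDGE_R)


@dataclass
class ModelFileInfo:
    file_format: Optional[str]       # extension, e.g. "gguf", "onnx", "pt"
    params_billion: Optional[float]  # parameter count in billions, e.g. 7.0
    precision: Optional[str]         # quantization/precision marker, e.g. "Q4_K_M"
    purpose: Optional[str]           # e.g. "chat" or "instruct"


def parse_model_filename(filename: str) -> ModelFileInfo:
    """Heuristically decode an LLM file name; fields not found are None."""
    stem, dot, ext = filename.rpartition(".")
    if not dot:  # no extension present
        stem, ext = filename, ""
    lowered = stem.lower()

    params = PARAMS_RE.search(lowered)
    quant = QUANT_RE.search(lowered)
    purpose = PURPOSE_RE.search(lowered)
    return ModelFileInfo(
        file_format=ext.lower() or None,
        params_billion=float(params.group(1)) if params else None,
        precision=quant.group(1).upper() if quant else None,
        purpose=purpose.group(1) if purpose else None,
    )
```

For a name like `llama-2-7b-chat.Q4_K_M.gguf`, the sketch reports a GGUF file with 7 billion parameters, a `Q4_K_M` quantization marker, and a chat purpose. The model version is not parsed, since version tokens vary too much to capture with one pattern.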

Category: AI

Enhance your fuzzy name search accuracy and flexibility by combining traditional methods with Generative AI. By following my step-by-step implementation, you can create…
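The excerpt does not say which traditional methods are combined with Generative AI, so the following is only an assumed example of the traditional half: normalize names (lowercase, strip punctuation, sort tokens) and score them with Python's standard-library `difflib.SequenceMatcher`. The `normalize` and `fuzzy_search` helpers and the 0.8 threshold are hypothetical, and the Generative AI step is not sketched here.

```python
import difflib
import re
from typing import List, Tuple


def normalize(name: str) -> str:
    """Lowercase, drop punctuation, and sort tokens so word order is ignored."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return " ".join(sorted(tokens))


def fuzzy_search(query: str, candidates: List[str],
                 threshold: float = 0.8) -> List[Tuple[str, float]]:
    """Return (candidate, score) pairs scoring >= threshold, best match first."""
    q = normalize(query)
    scored = []
    for cand in candidates:
        # ratio() is 2*M/T: matched characters over total characters, in [0, 1]
        score = difflib.SequenceMatcher(None, q, normalize(cand)).ratio()
        if score >= threshold:
            scored.append((cand, round(score, 3)))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

Sorting tokens makes "Smith, Jon" and "Jon Smith" normalize to the same string, so reordered names still score 1.0, while small spelling differences such as "John" still score high enough to pass the threshold.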

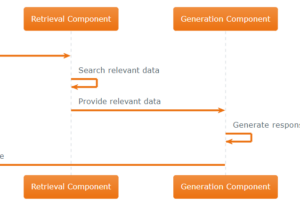

Key Components of Retrieval-Augmented Generation (RAG)

The retriever and the generator, the two key components of Retrieval-Augmented Generation (RAG) models, are fundamentally designed to enhance…

Retrieval-Augmented Generation (RAG) addresses several limitations of traditional natural language processing (NLP) models, making it an important step toward more accurate, relevant, and contextually rich text generation.