Breaking Barriers: Using Google’s “Teachable Machine” No Code Machine Learning Approach for Sign Language Recognition and Learning – Step by Step Experiment

Barriers for Sign Language Learning

Learning sign language presents unique challenges that necessitate innovative solutions to make the educational journey more effective and inclusive. One of the primary hurdles faced by individuals learning sign language is the imperative need for visual and interactive resources. Traditional methods often fall short in providing the dynamic and immersive learning experiences required for grasping the nuances of sign language gestures. Visual aids play a crucial role in understanding the spatial and gestural elements inherent in sign language, and interactive tools can facilitate active engagement and practice.

Moreover, the accessibility of traditional sign language learning methods is often limited, contributing to a gap in the availability of quality educational resources. Many individuals, especially those in remote or underserved areas, may face challenges in accessing specialized sign language courses or finding proficient instructors. This limitation exacerbates the struggle for those eager to learn sign language, hindering their ability to fully engage with and comprehend this unique mode of communication.

Google’s No-Code Machine Learning Approach

Teachable Machine, a web-based tool developed by Google, occupies a pivotal role in the landscape of machine learning education. It serves not only as a sophisticated machine learning model training tool but also as an educational platform, enabling users to explore and experiment with machine learning concepts without the traditional barriers of coding expertise. As we embark on a journey to understand its profound impact on sign language learning, it is essential to comprehend its overarching role and significance in the broader context of machine learning education.

In essence, Teachable Machine provides a user-friendly interface that allows individuals, regardless of their programming background, to train machine learning models with ease. Its “no-code” approach marks a paradigm shift, making machine learning accessible to a wider audience. This democratization of machine learning is particularly crucial in breaking down barriers and fostering inclusivity, allowing individuals from diverse backgrounds to engage with and contribute to the field.

The transformative power of Teachable Machine’s no-code approach becomes even more apparent when considering the challenges inherent in sign language learning. Traditional methods often lack visual and interactive resources, hindering the effective acquisition of sign language gestures. Furthermore, the accessibility of quality sign language education is limited, creating disparities in learning opportunities. Teachable Machine steps into this void, providing a versatile platform that empowers individuals to not only recognize and understand sign language but also to create and share their own interactive sign language learning tools.

Using Teachable Machine is quite straightforward

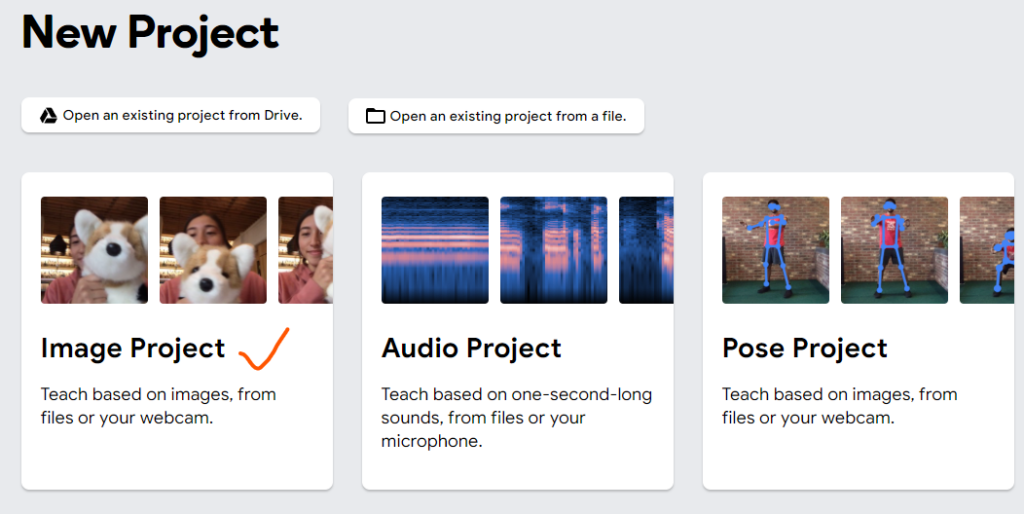

As of December 2023, Teachable Machine provides three types of projects: Image, Audio, and Pose. For our experiment, we will use an Image project.

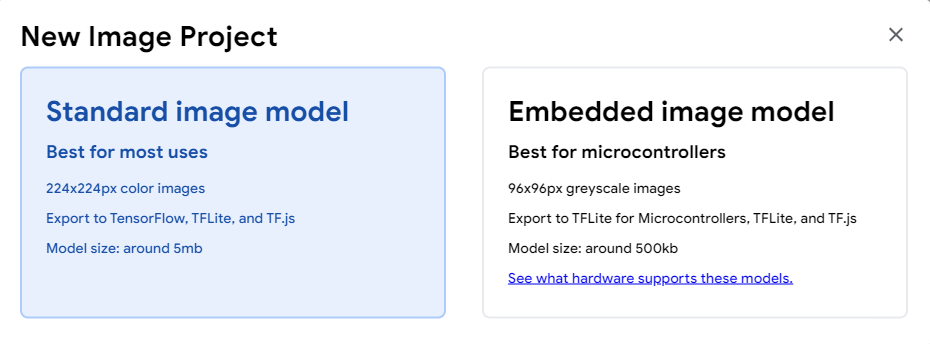

Click the “Image Project” panel, then choose “Standard image model”, which works with 224x224px color images and produces a model of around 5MB that can be exported for TensorFlow, TFLite, and TF.js.
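To make the 224x224px input size concrete, here is a minimal Python sketch of the preprocessing an image model like this typically expects: a centered square crop, followed by scaling each pixel value. The function names are hypothetical, and the [-1, 1] scaling is an assumption based on the sample Keras snippet Teachable Machine shows at export time, so check the snippet that ships with your own model.

```python
INPUT_SIZE = 224  # side length of the standard image model's input, in pixels


def center_crop_box(width, height):
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def normalize_pixel(value):
    """Map an 8-bit channel value (0..255) to the range [-1.0, 1.0]."""
    return value / 127.5 - 1.0


# A 640x480 webcam frame is cropped to a centered 480x480 square,
# which would then be resized to INPUT_SIZE x INPUT_SIZE.
box = center_crop_box(640, 480)
print(box)
print(normalize_pixel(0), normalize_pixel(255))
```

The crop matters for sign language samples: if your hand sits near the edge of a wide webcam frame, it may fall outside the square the model actually sees.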

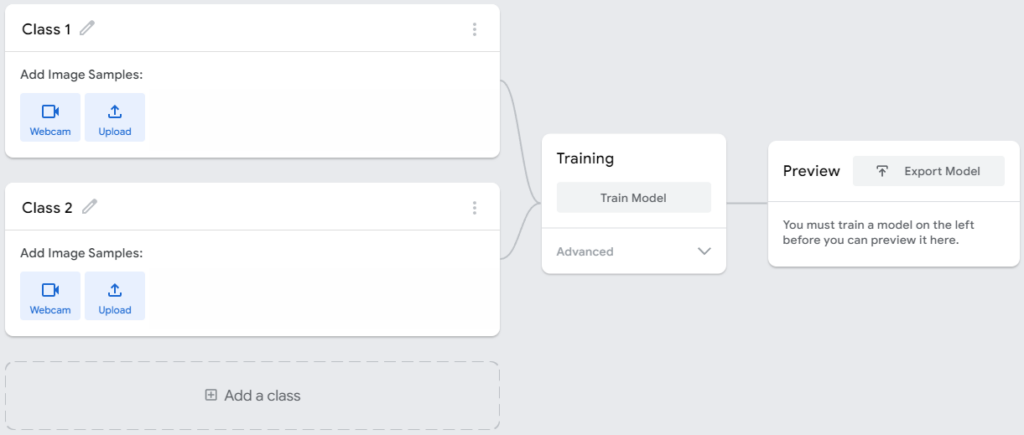

Then we will see the four main steps of using Teachable Machine:

- Gather data: collect samples from the Webcam or from files and group them into classes (categories) for the computer to learn

- Train: train the model based on the samples provided in Step 1

- Preview: Teachable Machine provides an instant preview to see if the model can correctly classify new samples.

- Export: you can export your model for use in your websites, apps and more. You can also download the model or host it online for other people to use.

Let’s Start

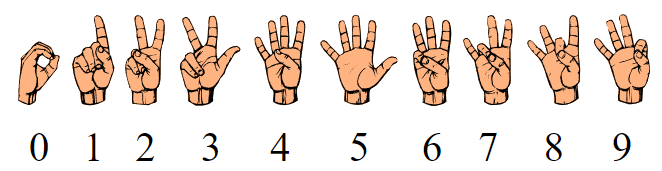

American Sign Language (ASL) 0 – 9

For this experiment, we will train our model in Teachable Machine to recognize the American Sign Language (ASL) signs for the digits zero (0) to nine (9).

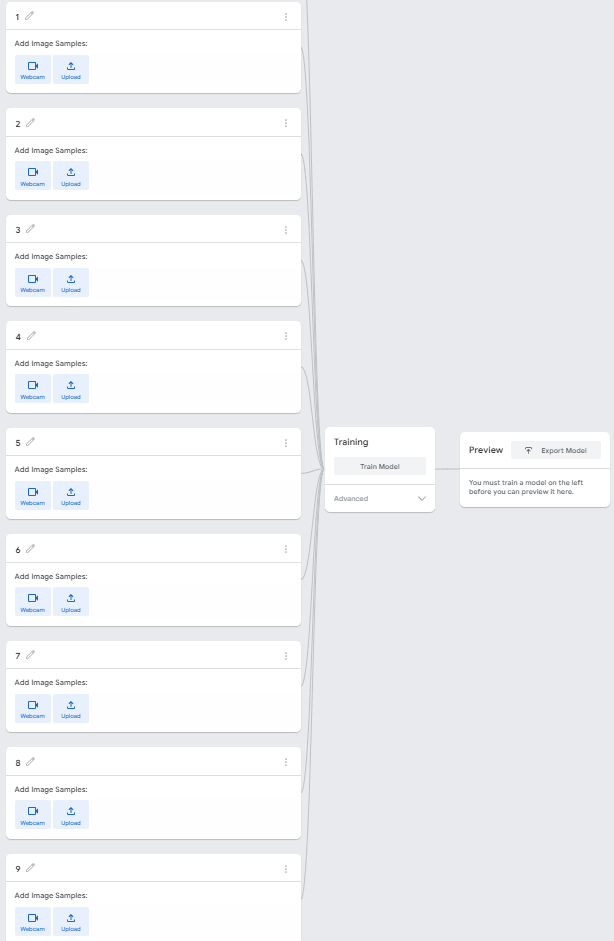

Define Classes for 0, 1, …, 9

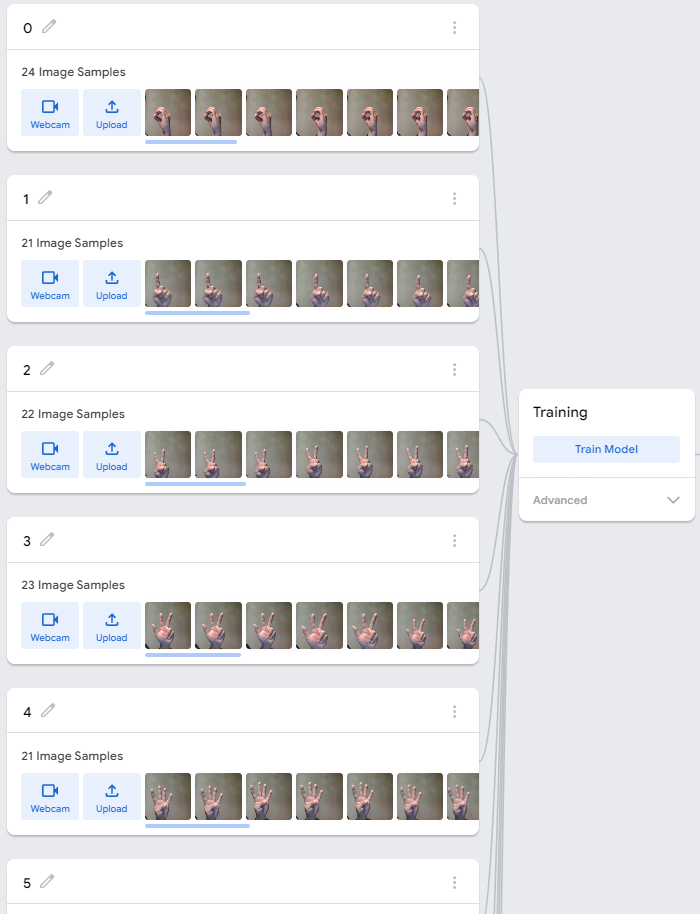
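The class names you define here travel with the model when you export it. As a rough sketch, assuming the export includes a text file of labels with one `index name` pair per line (the format used by the TensorFlow/Keras export at the time of writing; verify against your own download), the names could be read back like this. The `parse_labels` helper is hypothetical.

```python
def parse_labels(text):
    """Parse lines such as '3 three' into a dict like {3: 'three'}."""
    labels = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        index, _, name = line.partition(" ")
        labels[int(index)] = name
    return labels


# Simulated file content for ten classes named "0" to "9".
sample = "\n".join(f"{i} {i}" for i in range(10))
labels = parse_labels(sample)
print(labels[7])
```

Naming each class after the digit it represents keeps this mapping trivial to check later.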

Collect Samples via Webcam

Click the Webcam icon in each class to record your live samples. Note that the first time you use the Webcam on https://teachablemachine.withgoogle.com/, the browser will ask for your permission to access it.

Click the “Hold to Record” button to collect your samples.

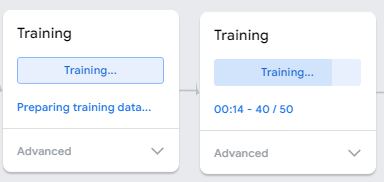

Training Model

Once samples for 0 to 9 have been gathered, start the model training process by clicking “Train Model” in the “Training” panel.

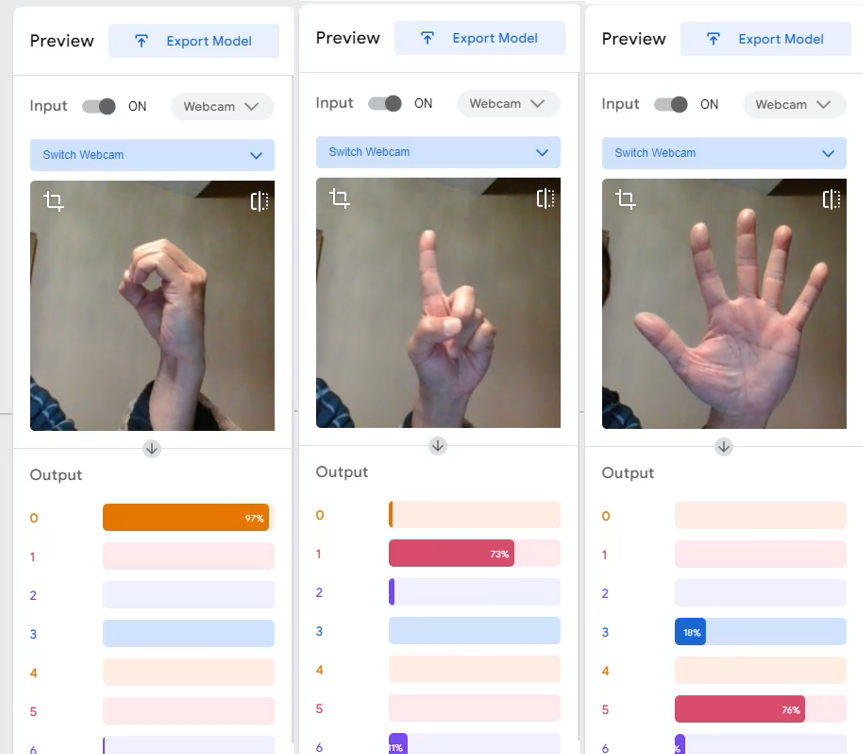

Preview and Export

After the training process is done, the preview is instantly available for you to test whether the model can recognize and classify new samples from the Webcam. If it works, you can click the “Export Model” button to export the model for further use; if not, you can go back to the first step and gather your samples again.
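The preview shows a confidence value for every class, and an exported model returns the same kind of per-class scores. A minimal Python sketch of turning those scores into a single answer might look like the following. The `top_prediction` helper and the 0.8 threshold are assumptions chosen for illustration, not part of Teachable Machine.

```python
def top_prediction(probabilities, labels, threshold=0.8):
    """Return (label, confidence), or (None, confidence) when unsure."""
    best_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    confidence = probabilities[best_index]
    if confidence < threshold:
        return None, confidence  # too uncertain to name a sign
    return labels[best_index], confidence


labels = [str(digit) for digit in range(10)]

# Hypothetical model output: the class for "4" clearly dominates.
scores = [0.01] * 10
scores[4] = 0.91
print(top_prediction(scores, labels))
```

Returning `None` below the threshold lets an application say "sign not recognized" instead of guessing, which is usually the friendlier behavior for a learner.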

Next Steps

Testing and refining the trained model

Testing and refining the trained model is a critical phase in the Teachable Machine workflow.

- The “Preview” panel becomes pivotal, allowing users to evaluate the model’s performance with new data. This step provides valuable insights into how well the model generalizes and recognizes different sign language gestures.

- Following testing, an iterative refinement process ensues, where the dataset is adjusted based on the testing results. This iterative approach helps address any inaccuracies or areas where the model may struggle.

- To enhance accuracy, users may need to repeat the training process, fine-tuning the model with additional examples and improvements gleaned from testing.
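One way to make this refinement loop systematic is to record what the model predicted for a set of test samples and look at accuracy per class. The sketch below is plain Python with hypothetical helper names and made-up test results; it only illustrates the bookkeeping, not anything built into Teachable Machine.

```python
from collections import Counter


def per_class_accuracy(results):
    """results: iterable of (expected, predicted) label pairs."""
    totals = Counter()
    correct = Counter()
    for expected, predicted in results:
        totals[expected] += 1
        if expected == predicted:
            correct[expected] += 1
    return {label: correct[label] / totals[label] for label in totals}


def most_confused(results):
    """Return ((expected, predicted), count) for the most common mistake."""
    mistakes = Counter((e, p) for e, p in results if e != p)
    return mistakes.most_common(1)[0] if mistakes else None


# Made-up results: the sign for "6" is sometimes read as "9".
results = [("6", "6"), ("6", "9"), ("9", "9"), ("6", "9")]
print(per_class_accuracy(results))
print(most_confused(results))
```

A class with low accuracy, or a pair of signs that is often confused, is a good candidate for more samples with varied angles, lighting, and backgrounds before retraining.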

Integration into Applications

Integrating the trained model into applications is a pivotal phase for sign language recognition and learning.

- Encouraging users to share feedback for continuous improvement.

- Exploring opportunities for real-time feedback in learning environments becomes essential, showcasing the potential for dynamic, interactive experiences that enhance the educational journey for individuals learning sign language.
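For real-time feedback, raw per-frame predictions tend to flicker while a hand moves into position. A common remedy is to report a sign only when it wins a majority of the most recent frames. The following Python sketch shows that idea; the `PredictionSmoother` class and its window sizes are hypothetical choices, independent of how the model itself is loaded.

```python
from collections import Counter, deque


class PredictionSmoother:
    """Report a label only when it dominates the last few frames."""

    def __init__(self, window=5, min_votes=3):
        self.history = deque(maxlen=window)  # oldest frames drop off
        self.min_votes = min_votes

    def update(self, label):
        """Add one frame's label; return the stable label or None."""
        self.history.append(label)
        winner, votes = Counter(self.history).most_common(1)[0]
        return winner if votes >= self.min_votes else None


smoother = PredictionSmoother()
# Simulated per-frame predictions with one spurious "8".
outputs = [smoother.update(label) for label in ["3", "3", "8", "3", "3"]]
print(outputs)
```

A larger window gives steadier feedback at the cost of a slower response, so the values are worth tuning against the frame rate of your application.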

If you possess a greater proficiency in coding and have a keen interest in delving deeper into Machine Learning, you may want to explore another article I’ve authored titled “MLX Unleashed: A Comprehensive Exploration of Apple’s Machine Learning Framework – A Step-by-Step Introduction”.
